HARRISBURG, Pa. — Pennsylvania's state Senate on Monday approved legislation that would outlaw the distribution of salacious or pornographic deepfakes, with sponsors saying it will eliminate a loophole in the law that had frustrated prosecutors.

The bill passed unanimously and now goes to the House.

The bill comes as states increasingly work to update their laws in response to such cases, which have included celebrities such as Taylor Swift being victimized through the creation and distribution of computer-generated images that use artificial intelligence to appear real.

Under the bill, one provision would make it a crime to try to harass someone by distributing, without their consent, a deepfake image depicting them nude or engaged in a sexual act. The offense would be more serious if the victim is a minor.

Another provision would outlaw such deepfakes created and distributed as child sexual abuse images.

President Joe Biden’s administration, meanwhile, is pushing the tech industry and financial institutions to shut down a growing market of abusive sexual images made with artificial intelligence technology.

Sponsors pointed to a case in New Jersey as an inspiration for the bill.

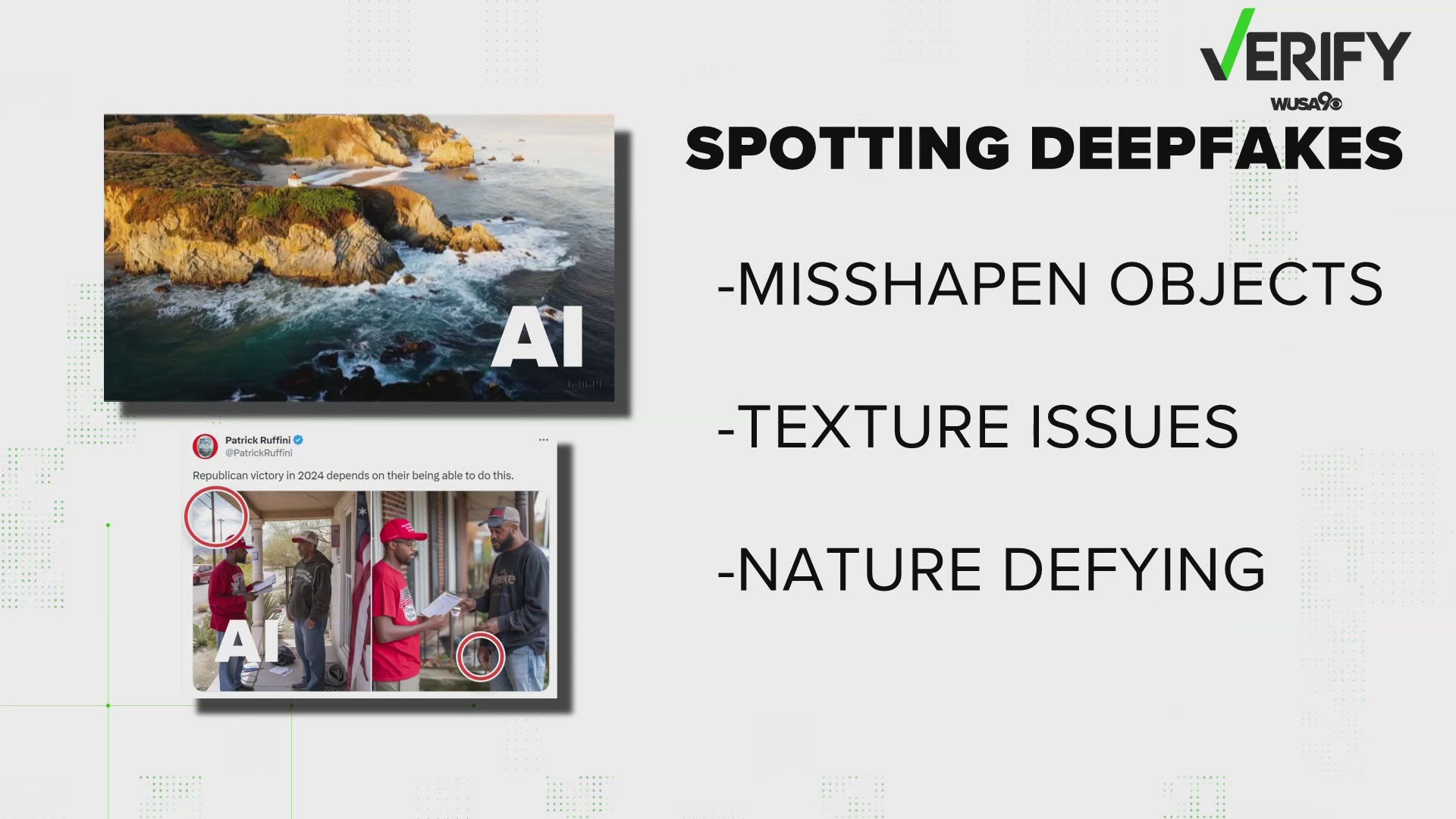

The problem of deepfakes isn’t new, but experts say it’s getting worse as the technology to produce them becomes more widely available and easier to use.

Researchers have been sounding the alarm on the explosion of AI-generated child sexual abuse material using depictions of real victims or virtual characters. Last year, the FBI warned it was continuing to receive reports from victims, both minors and adults, whose photos or videos were used to create explicit content that was shared online.

Several states have passed their own laws to try to combat the problem, such as criminalizing nonconsensual deepfake porn or giving victims the ability to sue perpetrators for damages in civil court.